Additional Notes on Krippendorff’s Alpha

Many methodological problems in testing reliability stem from violating the requirement for coders to be truly independent, being given coding instructions they cannot follow, or applying them to data that they fail to understand.

–Klaus Krippendorff, “Content Analysis Reliability Misconceptions”

Reading the above quotation from Klaus Krippendorff, the originator of Krippendorff’s alpha, helped give substance to our impression that treating the fact checkers as coders counts as a methodological blunder.

Are the elite three mainstream fact checkers independent? They certainly say they are, but they’re also known to cite one another at times when fact checking the same subject matter.

Are the elite three mainstream fact checkers given coding instructions they cannot follow? That’s hard to say, given that each uses its own set of coding instructions about which we don’t know all the details. When Checking examines the consistency of the fact checkers as though measuring coder consistency, it uses its own second layer of coders to translate the fact checkers’ varied outputs into a format Checking then uses to rate consistency. In language terms, we’re reading a translation of a translation. We think that process makes the Krippendorff’s alpha scoring for the fact checkers unrealistically positive, even at the mundane .66 level.

Do the fact checkers fail to understand the data? There’s the rub. We don’t know whether the fact checkers understand the data, yet the study tries to show that they do by using a tool that yields meaningful results only if they already understand the data.

Is a Krippendorff’s alpha of .66 good enough?

We were originally suspicious of the consistency claimed in Checking because of the very low variation in the data. Given the predominance of false ratings, consistency over 90 percent was guaranteed; even sorting the results entirely by chance could hardly have failed to reach that heady percentage. Krippendorff’s alpha, as we mentioned on Page 2, was developed partly to add needed context to such misleadingly high levels of agreement.
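A quick way to see why lopsided data inflates agreement is to ask what percent agreement two coders would reach by pure chance. The sketch below is our own illustration, not part of Checking or ReCal2: it assumes each hypothetical coder independently rates a statement false with the same probability, and the shares tried (90, 95 and 98 percent false) are illustrative values, not counts from the study.

```python
def chance_agreement(p_false: float) -> float:
    """Expected percent agreement for two coders who rate at random.

    Assumption (ours, for illustration): each coder independently labels a
    statement "false" with probability p_false and "true" otherwise.
    """
    p_true = 1.0 - p_false
    # The coders agree when both say "false" or both say "true".
    return p_false ** 2 + p_true ** 2


# Hypothetical shares of false ratings, not figures from Checking.
for share in (0.90, 0.95, 0.98):
    print(f"{share:.0%} false ratings -> {chance_agreement(share):.1%} agreement by chance")
```

At a 95 percent share of false ratings, random rating alone yields about 90.5 percent agreement. Alpha’s expected-disagreement term exists to discount exactly this kind of chance agreement.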

We’re going to show how the alpha works, with the help of Deen Freelon’s calculator.

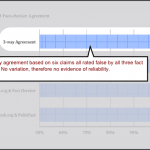

We’ll start with Checking’s comparison between FactCheck.org and the Washington Post Fact Checker. As we noted earlier, all 15 ratings these two fact checkers had in common were false ratings. That leaves no basis for assessing coder reliability.

We put the data in a Google spreadsheet and saved it in the .csv format required by Freelon’s ReCal2 calculator. The result? “Scott’s pi, Cohen’s kappa, and Krippendorff’s Alpha are undefined for this variable due to invariant values.” This illustrates Krippendorff’s point that lack of variation makes reliability impossible to measure. We’ll go on to illustrate that low levels of variation result in poor reliability.
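ReCal2’s refusal is consistent with how alpha is built. As a sketch, here is the usual form for two coders, nominal categories and no missing ratings, in our own notation: each statement rated $c$ by one fact checker and $k$ by the other adds one to the coincidence count $o_{ck}$ and one to $o_{kc}$; $n_c$ is the number of ratings in category $c$; and $n$ is the total number of ratings.

$$
\alpha = 1 - \frac{D_o}{D_e}, \qquad
D_o = \frac{1}{n}\sum_{c \neq k} o_{ck}, \qquad
D_e = \frac{1}{n(n-1)}\sum_{c \neq k} n_c\, n_k
$$

With all 15 shared ratings false, $n = 30$, $n_{\text{false}} = 30$ and $n_{\text{true}} = 0$. Both sums are empty, so $D_o = D_e = 0$ and $\alpha$ reduces to the undefined ratio $0/0$.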

Let’s suppose that FactCheck.org and the Fact Checker had one of their agreements based on rating a statement true. We alter our spreadsheet by replacing the zeros on the first line with ones. We’re keeping 100 percent agreement and adding minimal variation. Krippendorff’s alpha zooms to a perfect 1.0.

Now let’s suppose FactCheck.org and the Fact Checker disagree on that one true rating. We create another spreadsheet, duplicating the data from the first, but alter cell 1B from “1” to “0.” The result? Krippendorff’s alpha drops to zero.
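For readers who want to reproduce these three results without the calculator, here is a minimal re-implementation of nominal Krippendorff’s alpha. It is a sketch under our own assumptions (two coders, ratings coded 0 for false and 1 for true, no missing values); the function name `nominal_alpha` and the data lists are ours, not part of ReCal2.

```python
from collections import Counter
from typing import List, Optional, Tuple

# One tuple per statement: (first checker's rating, second checker's rating).
# Coding assumed here: 0 = false, 1 = true, matching the spreadsheets above.
Pair = Tuple[int, int]


def nominal_alpha(pairs: List[Pair]) -> Optional[float]:
    """Krippendorff's alpha for two coders, nominal categories, no missing data.

    Returns None when alpha is undefined because every value is identical.
    """
    # Coincidence matrix: a statement rated (a, b) adds one to o[(a, b)]
    # and one to o[(b, a)], so an agreement adds two to a diagonal cell.
    o: Counter = Counter()
    for a, b in pairs:
        o[(a, b)] += 1
        o[(b, a)] += 1

    # n_c: how many values fall in each category; n: total pairable values.
    n_c: Counter = Counter()
    for (c, _k), count in o.items():
        n_c[c] += count
    n = sum(n_c.values())

    # Off-diagonal sums for observed and expected disagreement.
    observed = sum(count for (c, k), count in o.items() if c != k)
    expected = sum(n_c[c] * n_c[k] for c in n_c for k in n_c if c != k)
    if expected == 0:
        return None  # invariant values: nothing to measure agreement against

    # alpha = 1 - D_o / D_e with D_o = observed / n and D_e = expected / (n(n-1)).
    return 1.0 - (n - 1) * observed / expected


all_false = [(0, 0)] * 15                      # the 15 shared false ratings
one_true_agreed = [(1, 1)] + [(0, 0)] * 14     # first row changed to true
one_true_disputed = [(1, 0)] + [(0, 0)] * 14   # cell 1B changed from 1 to 0

print(nominal_alpha(all_false))          # None (undefined)
print(nominal_alpha(one_true_agreed))    # 1.0
print(nominal_alpha(one_true_disputed))  # 0.0
```

Percent agreement is 100 percent in the first two cases and about 93 percent in the third, yet alpha swings from undefined to perfect to zero on the strength of a single rating.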

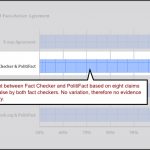

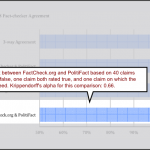

Let’s move on to the comparison between FactCheck.org and PolitiFact and do a similar exercise. Treating this comparison as we did the earlier one, we get the results Amazeen highlights, 98 percent agreement and a Krippendorff’s alpha of 0.66:

The above test consists of 40 pairs of matched false statements, one matched pair of true statements and one mismatched pair (one true, one false). Checking calls this an acceptable level of reliability.

The above test consists of 40 pairs of matched false statements, one matched pair of true statements and one mismatched pair (one true, one false). Checking calls this an acceptable level of reliability.

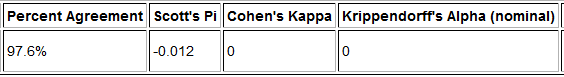
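The 0.66 figure can be checked by hand. For two coders and two categories (0 for false, 1 for true) with no missing ratings, the nominal form of alpha simplifies to the expression below, where $n$ is the total number of ratings, $n_0$ and $n_1$ count the false and true ratings, and $o_{01} + o_{10}$ is twice the number of mismatched statements. This is our own worked arithmetic, using only the counts stated in the text: 42 statements give $n = 84$, $n_0 = 2(40) + 1 = 81$, $n_1 = 2(1) + 1 = 3$ and $o_{01} + o_{10} = 2$.

$$
\alpha = 1 - \frac{(n-1)\,(o_{01}+o_{10})}{2\,n_0\,n_1}
       = 1 - \frac{83 \cdot 2}{2 \cdot 81 \cdot 3}
       = 1 - \frac{166}{486} \approx 0.658
$$

Percent agreement on the same data is $41/42 \approx 97.6$ percent.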

Let’s take away the lone matched pair of true statements, changing both to false statements. The agreement percentage remains the same at 97.6 percent. With the two values changed at row 37, Krippendorff’s alpha drops like a rock:

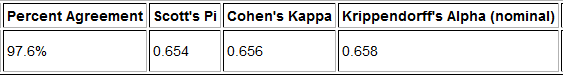

Now let’s create more variation in the data by restoring the pair of true values on row 37 and adding another pair of true values on row 38. As expected, we keep the same percent agreement as in the original trial and find improved reliability according to Krippendorff’s alpha.
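The three FactCheck.org/PolitiFact variants can also be compared side by side in a short script. This sketch uses a shortcut we derived by specializing nominal alpha to two coders and two categories: $\alpha = 1 - (n-1)\,d / (n_{\text{false}}\,n_{\text{true}})$, where $n$ counts all ratings and $d$ counts mismatched statements. The function names are hypothetical, and we assume the new “row 38” pair replaces one of the 40 matched false pairs, so every variant keeps 42 statements and one mismatch.

```python
from typing import List, Optional, Tuple

# (FactCheck.org rating, PolitiFact rating) per statement; 0 = false, 1 = true.
Pair = Tuple[int, int]


def percent_agreement(pairs: List[Pair]) -> float:
    """Share of statements on which the two ratings match."""
    return sum(1 for a, b in pairs if a == b) / len(pairs)


def binary_alpha(pairs: List[Pair]) -> Optional[float]:
    """Krippendorff's alpha for two coders and two categories, no missing data.

    Uses the shortcut alpha = 1 - (n - 1) * d / (n_false * n_true), where n
    counts all ratings and d counts mismatched statements.
    """
    n = 2 * len(pairs)
    n_true = sum(a + b for a, b in pairs)
    n_false = n - n_true
    d = sum(1 for a, b in pairs if a != b)
    if n_true == 0 or n_false == 0:
        return None  # no variation, alpha undefined
    return 1.0 - (n - 1) * d / (n_false * n_true)


# Assumed layouts (ours): the "row 38" pair replaces one matched false pair,
# so every variant keeps 42 statements and a single mismatch.
variants = {
    "original test": [(0, 0)] * 40 + [(1, 1)] + [(1, 0)],
    "true pair made false": [(0, 0)] * 41 + [(1, 0)],
    "second true pair added": [(0, 0)] * 39 + [(1, 1)] * 2 + [(1, 0)],
}

for label, pairs in variants.items():
    print(f"{label}: agreement {percent_agreement(pairs):.1%}, "
          f"alpha {binary_alpha(pairs):.2f}")
```

Under these assumptions percent agreement stays at 97.6 percent throughout, while alpha moves from 0.66 to 0.00 to 0.79 depending only on how many true ratings the two fact checkers share.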

We think this shows that the level of reliability accepted in Checking comes in at one notch above complete unreliability. It’s incumbent on Checking’s author to clearly and convincingly justify accepting such low reliability.
