A May 28 press release announcing a new study of PolitiFact pushed the issue of selection bias in fact-checking to the political front burner this week. The response from PolitiFact’s founder and editor, Bill Adair, provides a perfect avenue for highlighting some of the worst aspects of that fact-checking operation.

The study, from the Center for Media and Public Affairs at George Mason University, found that PolitiFact tends to give Republicans harsher ratings than Democrats. The CMPA’s press release summarizes the results of the study:

The study finds that PolitiFact.com has rated Republican claims as false three times as often as Democratic claims during President Obama’s second term. Republicans continue to get worse marks in recent weeks, despite controversies over Obama administration statements on Benghazi, the IRS and the AP.

The Poynter Institute, an education organization for journalists and the owner of the Tampa Bay Times and PolitiFact, put itself at the forefront of the coverage, quickly obtaining Adair’s reaction to the study’s findings.

Tellingly, the Poynter article appears to misconstrue the meaning of the study. The CMPA’s study continues an ongoing examination of PolitiFact’s story selection and ratings. Eric Ostermeier of “Smart Politics” and the University of Minnesota conducted a broader study covering PolitiFact around 2010. Media outlets have tended to misreport both studies.

Just as tellingly, Adair’s response as quoted in the article suggests he likewise misunderstands the study’s implications:

“PolitiFact rates the factual accuracy of specific claims; we do not seek to measure which party tells more falsehoods,” PolitiFact Editor Bill Adair wrote in an email to Poynter. “The authors of this press release seem to have counted up a small number of our Truth-O-Meter ratings over a few months, and then drew their own conclusions.”

Adair went on to describe how PolitiFact’s process for creating content militates against using “Truth-O-Meter” ratings as a scientific measure of party truthfulness. But that is exactly why the studies by CMPA and Smart Politics matter. PolitiFact’s rating system and presentation automatically send a message about party truthfulness. As Ostermeier wrote at the time:

The question is not whether PolitiFact will ultimately convert skeptics on the right that they do not have ulterior motives in the selection of what statements are rated, but whether the organization can give a convincing argument that either a) Republicans in fact do lie much more than Democrats, or b) if they do not, that it is immaterial that PolitiFact covers political discourse with a frame that suggests this is the case.

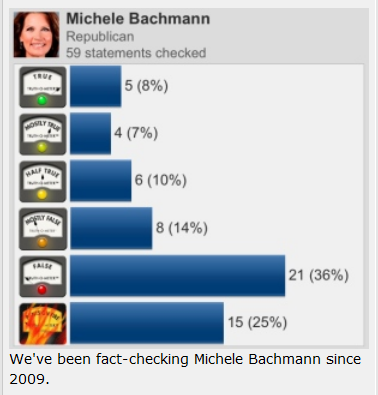

Adair denies that PolitiFact makes claims about party truthfulness, yet PolitiFact does exactly that implicitly. And PolitiFact sends similar messages more explicitly with items on individual political figures. An article from May 29 features a graphic summarizing all the ratings for Rep. Michele Bachmann, who this week announced her retirement from Congress. PolitiFact often publishes such “report cards.” But candidate report cards rest on no firmer scientific basis than a judgment of party truthfulness would. The sample suffers from selection bias.

Given that PolitiFact explicitly encourages readers to judge candidates according to a skewed sample, and implicitly encourages them to judge the parties the same way, we just can’t accept Adair’s claim that PolitiFact doesn’t rate the truthfulness of the parties.

Sure, PolitiFact leaves the judgments to its readers, but it never explains the importance of selection bias. If a politician were responsible for that kind of presentation, PolitiFact might well rate it “Half True.”

Disingenuous or just slightly clueless?

We marveled at Adair’s description of selection bias when he responded to the George Mason University study. We marveled because Adair displayed no apparent understanding of selection bias in an October 2011 interview with Gabrielle Gorder for the National Press Foundation. Gorder asked Adair how PolitiFact avoids selection bias. Adair answered by describing PolitiFact’s selection process. That answer effectively confirms that PolitiFact’s story selection is subject to selection bias, yet it may leave uninformed listeners with the impression that the process somehow neutralizes it. Adair should have simply answered, “We don’t avoid selection bias.” If he wanted to describe the selection process after that, fine. The response he gave creates a misleading impression.

Adair quite possibly had no intention of misleading anyone. Perhaps his understanding of selection bias is simply fuzzy enough to produce sincere answers that reflect confusion. Either way, it’s inexcusable for Adair to show caution about judging party truthfulness while eagerly publishing candidate report cards that rely on similarly biased samples. His position is inconsistent, as is PolitiFact’s.

PolitiFact’s response to the George Mason University study

PolitiFact responded to the George Mason University study on May 29, essentially by restating the response Adair gave to the Poynter Institute.

PolitiFact’s response, like Adair’s to Poynter, gives the surface impression that PolitiFact denies the study’s findings. But the study suggests that selection bias affects both PolitiFact’s choice of stories and its rulings. By conceding that its selection process makes its ratings unfit as a measure of party truthfulness, PolitiFact’s response partly confirms what the study suggests.

Eric Ostermeier’s question still hangs. Bill Adair and PolitiFact still publish fact checks with a frame suggesting Republicans use more untruths in their public statements. Adair and PolitiFact offer only a soft denial of the frame that harms Republicans, while continuing to embrace similar framing for individual politicians. Framing built on such a poor foundation falls well short of best practices in fact-checking journalism.

Afterword

What about the new CMPA study? Does it show PolitiFact has a bias against conservatives?

So far as we can tell, nobody who has responded to the press release has read the study. We count ourselves among those unable to find it. Based on the press release, however, we expect it builds a weak case for an anti-conservative bias at PolitiFact, resting on PolitiFact treating Republican statements more harshly than Democratic statements during a time when the Democratic Party faces a potentially difficult news cycle. The news cycle should produce some significant fluctuations in ratings by fact checkers. If such fluctuations show up only minimally, then it makes sense to look for an explanation in the most constant factor in the ratings: the journalists doing the ratings.

We produced a study, hosted at politifactbias.com, that we think does the best job so far of using PolitiFact’s stats to show its organizational bias. PolitiFact gives researchers the best tools for such research with its elaborate rating system and large pool of stories, so we expect more of the same in the years to come.