An Honors Thesis by a Duke University economics student recently came to my attention.

Writing in 2023, Thomas A. Colicchio mathematically analyzed PolitiFact “Truth-O-Meter” ratings in an attempt to objectively measure bias. We found an error in his approach that renders a portion of his findings effectively moot. As for the rest, it helps build the case against PolitiFact’s supposed neutrality and agrees with conclusions we reached about PolitiFact Wisconsin based on our own studies of PolitiFact’s use of its “Pants on Fire” rating.

We’ll refer to Colicchio’s paper, Bias in Fact Checking?: An Analysis of Partisan Trends Using PolitiFact Data as Trends.

Improper Attention on Individual Fact Checkers

Trends draws conclusions about partisanship among individual PolitiFact fact checkers based on the “Truth-O-Meter” rating associated with their fact checks. Though Trends properly guards against charging the fact checkers with personal bias, the entire approach neglects a key aspect of PolitiFact’s workflow: The individual fact-checkers do not get the final say about the “Truth-O-Meter” rating.

Trends’ description of PolitiFact’s method gets it wrong:

This PolitiFact dataset ranges from May 2, 2007 – PolitiFact’s establishment – until the date the scraping script was run – February 23, 2023. Each observation represents an individual fact check that includes the date it occurred, the author of the fact check, the statement being checked, the individual or group who made said statement, and the author’s ruling on the veracity of the statement.

PolitiFact, on a web page stating its principles, offers a different account:

The reporter who researches and writes the fact-check suggests a rating when they turn in the report to an assigning editor. The editor and reporter review the report together, typically making clarifications and adding additional details. They come to agreement on the rating. Then, the assigning editor brings the rated fact-check to two additional editors.

…

The three editors then vote on the rating (two votes carry the decision), sometimes leaving it as the reporter suggested and sometimes changing it to a different rating.

So, the multiple-editor “Star Chamber” ultimately decides the ratings. The fact checker who wrote the fact check may well disagree with the rating the editors settle on, though groupthink likely keeps that from occurring routinely.

This error does not negate the value of Trends, except with respect to its judgments about bias in the work of individual PolitiFact fact checkers. Those bias observations rightly accrue to whichever editors happen to make up PolitiFact’s “Star Chambers.” PolitiFact does not publicly identify those editors, nor does it disclose how they voted.

“A heterogeneity in their relative ratings of Democrats and Republicans that may suggest the presence of partisanship”

Trends identifies partisan actors, excluding statements of the political party organizations, and infers bias through an examination of story choices and ratings. Trends notes that PolitiFact mostly fact checks Republicans and gives Republicans an average rating “a little over half a category worse.”

As with Eric Ostermeier’s PolitiFact study, Trends stops short of bluntly charging PolitiFact with liberal bias. Instead, Trends uses the data to help understand Republican skepticism of PolitiFact’s reliability and recommends that the fact checkers figure out how to fix the appearance of bias.

Our favorite paragraph:

Many believe – especially due to the impact of President Trump – that Republicans should receive worse ratings on fact checks, however, it is important to test this claim empirically, if fact checkers genuinely want to hold political figures accountable for false statements and bolster the American public’s media literacy. In its current form, fact checking is trusted and respected by one side of the political spectrum while it is at best ignored and at worst ridiculed by the other side. Thus, in order for fact checking to serve its social function to the best of its ability, it is essential to either refute the existence of partisan bias and consider better strategies for building trust amongst Republicans or confirm its existence and consider how these biases can be mitigated.

Trends offers readers a specific example of a fact check that might stimulate Republican distrust (coincidentally a case I highlighted at PolitiFactBias.com):

An example of a PolitiFact fact check that could leave one with the impression that the organization is biased comes from a review of a tweet from Tammy Baldwin. The Democratic Senator tweeted that “Latina workers make 54 cents for every dollar earned by white, non-Hispanic men,” and PolitiFact’s D.L. Davis rates the statement as “true.” Davis admits that the research she cites to corroborate the claim does not account for the fact that these two populations on average hold different jobs, and that if this difference was considered, the claim would no longer hold (Davis 2022). As Baldwin’s statement is quoted above, it is possible to believe that all she was arguing was that the average white man earns nearly twice as much as the average Latina woman. Yet, in the next sentence of her tweet, Baldwin asserted that “it’s past time that Latina workers are given equal pay for equal work” (Baldwin 2022). In this way, it seems reasonable to infer that Davis – who noted that she found this statement on Twitter – cherry-picked Baldwin’s first sentence and chose not to include the subsequent one because doing so would alter the “true” rating per Davis’ own explanation.

“PolitiFact Bias” and Zebra Fact Check have documented similar examples by the dozen. We judge Trends makes a good point.

Correlated Findings

A number of the inferences from Trends echo those I wrote about while reporting on the ongoing “PolitiFact Bias” ‘Pants on Fire’ bias study. That latter study used a dataset akin to that used by Trends, though our study focused narrowly on PolitiFact’s apparently subjective decisions to rate statements “Pants on Fire” rather than merely “False.” The correlated inferences support our supposition that the bias findings from our study likely offer a picture of the degree of bias present in harder-to-measure areas.

PolitiFact National Bears the Bias Banner

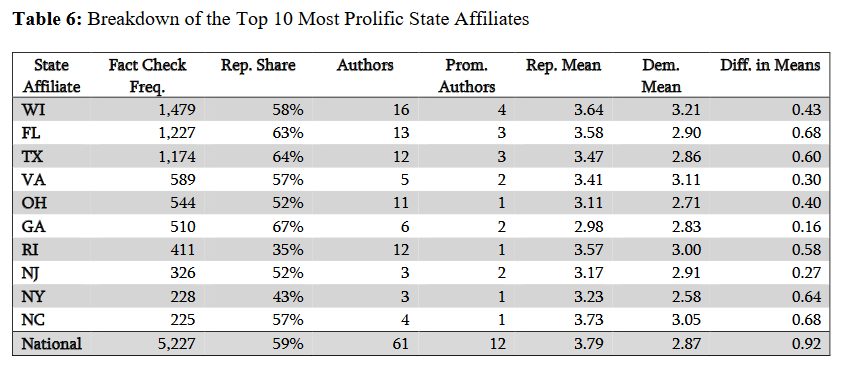

Both studies noted that the numbers from PolitiFact National run strongly against Republicans. Table 6 from Trends powerfully illustrates the point. Note the righthand column reporting the difference between the average ratings for Democrats and Republicans.

As Table 6 shows, PolitiFact National’s difference of 0.92 easily bests the 0.68 from runners-up PolitiFact Florida and PolitiFact North Carolina.

The difference for PolitiFact National represents nearly a full rating.
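For readers who want to see what a “difference between the average ratings” means in practice, here is a minimal Python sketch. It is not Colicchio’s code. The 0-to-5 numeric coding of the “Truth-O-Meter” scale, the `rating_gap` helper, and the sample fact checks are all assumptions made up for illustration; only the six rating names come from PolitiFact.

```python
from statistics import mean

# Assumed ordinal coding of the "Truth-O-Meter": one point per category.
# Trends may code the scale differently; this is only an illustration.
SCALE = {
    "Pants on Fire": 0,
    "False": 1,
    "Mostly False": 2,
    "Half True": 3,
    "Mostly True": 4,
    "True": 5,
}

def rating_gap(fact_checks):
    """Return the mean Democratic score minus the mean Republican score."""
    scores = {"D": [], "R": []}
    for party, rating in fact_checks:
        scores[party].append(SCALE[rating])
    return mean(scores["D"]) - mean(scores["R"])

# Hypothetical fact checks, invented for this example (not real PolitiFact data).
sample = [
    ("D", "True"), ("D", "Half True"), ("D", "Mostly True"), ("D", "Mostly False"),
    ("R", "False"), ("R", "Half True"), ("R", "Mostly False"), ("R", "Mostly True"),
]

gap = rating_gap(sample)
print(f"Average rating gap (D minus R): {gap:.2f}")
```

Under that assumed coding, a gap of 1.00 would mean Republicans average exactly one category worse than Democrats, which is why a 0.92 gap reads as nearly a full rating.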

PolitiFact Wisconsin

Both studies use PolitiFact Wisconsin as an example of a state operation that moderates the extremes from PolitiFact National when the numbers are grouped. It’s worth noting that the “Pants on Fire” study shows the moderating effect from state operations has appeared to wane over time. Trends does not measure differences in ratings over time.

The Unstated Key Takeaway

As noted, Trends does not get to the point of directly claiming that PolitiFact is politically biased. That’s simply because finding compelling evidence in the ratings counts as a challenge. It’s hard to rule out competing hypotheses. But, returning to Table 6, approaches that take PolitiFact’s state operations into account offer powerful evidence that it makes a big difference who’s doing the fact-checking and affixing the “Truth-O-Meter” ratings.

Consider: What best explains the gap between PolitiFact National’s 0.92 difference in its ratings of Democrats and Republicans and PolitiFact Georgia’s 0.16 difference? Are politicians from Georgia that different from politicians on the national stage? Or does the gap stem from different tendencies in selection bias and political bias among the fact-checkers?

The latter counts as the more parsimonious explanation. We can’t accept an explanation based on “Republicans lie more” without dealing with the alternative explanation that journalists allow bias to creep into their work.

Trends contributes to the scholarship showing the need to address that alternative explanation.