If a fact checker cannot verify that something is true but finds that it might be true, does that make it half true?

And what if a fact checker runs a headline asking one question while the fact check content answers a different question?

Dark Money Paid for Over 80 Percent?

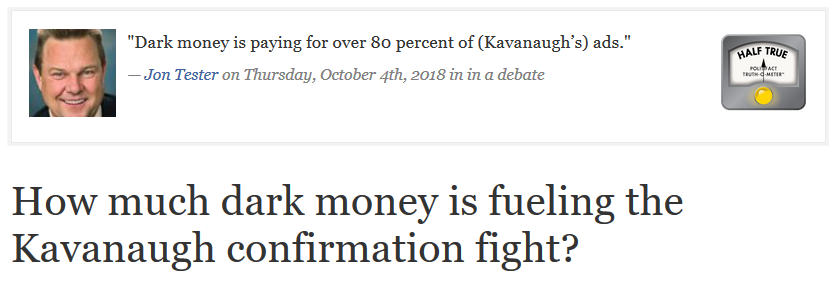

PolitiFact’s Oct. 5, 2018 fact check of a claim from Sen. Jon Tester (D-Mont.) that dark money paid for over 80 percent of the ads supporting Kavanaugh fails to either verify Tester’s claim or show the amount of money spent on such ads:

We did our best to tally up the outside spending on the Kavanaugh confirmation fight. But it’s difficult if not impossible to conclusively say how much money is involved.

Experts said dark money comprises a substantial portion of spending on the Supreme Court confirmation fight. It’s even possible dark money makes up 80 percent or more of the overall share, as Tester said. But we simply can’t be sure. Neither can Tester.

PolitiFact rated Tester’s claim “Half True,” which PolitiFact defines as “The statement is partially accurate but leaves out important details or takes things out of context.”

So PolitiFact admits it has no idea whether Tester’s claim was right but calls it partially accurate.

It concerns us when a fact checker calls a claim partially accurate without knowing whether the claim was accurate or even close to correct. It concerns us even more when the fact checker runs a misleading headline that the body of the fact check fails to adequately clarify.

Is There Any Difference Between Dark Money Ads “Fueling the Kavanaugh Confirmation Fight” and Dark Money Ads Supporting Kavanaugh?

PolitiFact’s fact check carried the headline “How much dark money is fueling the Kavanaugh confirmation fight.”

PolitiFact tried to answer that question by examining only the ads supporting Kavanaugh. In PolitiFact’s defense, the context of Tester’s claim (which PolitiFact neglected to link from its fact check) makes clear that Tester was talking about dark money supporting Kavanaugh.

But that fails to excuse the misleading headline, and the fact check never acknowledges any dark money spending from liberal groups. The unwary reader would tend to conclude that all the dark money spending supported Kavanaugh.

Why such ambiguity from a fact checker that advertises itself as “nonpartisan”? What educational purpose does it serve to omit information about dark money spending liberals used to oppose the Kavanaugh nomination?

Addressing the Problems with PolitiFact’s Narrative

We’ve already reviewed two problems with PolitiFact’s fact check. It reached a verdict without a finding of fact and it misled readers with a one-sided account of dark money spending.

In this section we will delve more deeply into those problems and offer a more thorough fisking of Sen. Tester’s claim.

We used tools available through C-SPAN to create a video of Tester’s remarks along with the surrounding context. We sent a link to the video to the PolitiFact team responsible for the fact check (John Kruzel and Angie Drobnic Holan) in hopes PolitiFact will make good on its commitment to give readers the source material for its fact checks.

The Other Side of the Scale

PolitiFact used two sources for its examination of Kavanaugh ads. The first source was information provided by Sen. Tester’s campaign.

Tester’s campaign pointed us to a slew of announcements from eight groups that are funding pro-Kavanaugh ads.

We detected no attempt by PolitiFact to use primary sources to verify the information it received from the Tester campaign. Tester’s list did not give a total amount of spending for ads supporting Kavanaugh. As a result it serves poorly to determine the percentage of spending made up of dark money.

Tester’s list named no groups opposing Kavanaugh.

The second source was the left-leaning Brennan Center for Justice’s accounting of dark money spending. PolitiFact presented its readers with a summary of those findings minus left-leaning dark money sites. On the positive side, the chart carried the apt description “Funding for pro-Kavanaugh ads.” However, as noted above, the fact check never acknowledges that liberal groups used dark money to publicize ads opposing Kavanaugh.

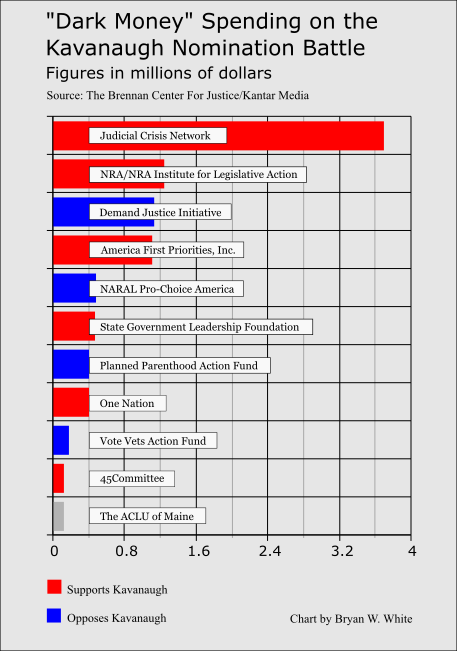

The Brennan Center data sheet clearly shows liberal groups spending dark money for anti-Kavanaugh ads. The Demand Justice Initiative came in third on the Brennan Center’s list with spending of over $1.1 million.

We created a chart (right) showing all the dark money groups from the list that spent over $100,000 on ads.

PolitiFact showed a total of $7.07 million in dark money from supporters of Kavanaugh. We added the Brennan Center totals, ending up with $2.31 million in dark money from Kavanaugh opponents. PolitiFact had to encounter this information while assessing the Brennan Center data. But PolitiFact omitted the data and framed the fact check to suggest that all dark money spending supported Kavanaugh.
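For readers who want to check the proportions, here is a minimal sketch of the arithmetic using only the two totals quoted above. The variable and function names are ours, chosen for illustration. Note the limits of the calculation: it shows how the dark money in the Brennan Center data divides between the two sides. It does not measure dark money as a share of all pro-Kavanaugh ad spending, so it neither confirms nor refutes Tester’s “80 percent” figure.

```python
# Figures in millions of dollars, taken from the paragraph above.
# Names are illustrative; the totals are as reported, not independently audited.
pro_kavanaugh = 7.07   # PolitiFact's total for dark money supporting Kavanaugh
anti_kavanaugh = 2.31  # our sum of Brennan Center entries opposing Kavanaugh


def share(part, whole):
    """Return part as a percentage of whole."""
    return 100 * part / whole


combined = pro_kavanaugh + anti_kavanaugh

print(f"Combined dark money: ${combined:.2f} million")
print(f"Supporting Kavanaugh: {share(pro_kavanaugh, combined):.1f}%")
print(f"Opposing Kavanaugh: {share(anti_kavanaugh, combined):.1f}%")
```

On these figures, roughly a quarter of the dark money in the combined total went to oppose Kavanaugh.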

Tester’s Underlying Argument

PolitiFact’s founding editor, Bill Adair, wrote that the most important aspect of a numbers claim is its underlying point.

We think PolitiFact missed Tester’s point.

PolitiFact’s analysis appears to work from the assumption that Tester was simply pointing out that quite a bit of dark money was going toward support of Kavanaugh’s nomination. But that view ignores the context of Tester’s debate remarks. Tester objected to Kavanaugh’s judicial support of dark money in politics.

“I went into the process of digging into his background to find out where he was on particular issues. I found out that he was one of the architects behind the mass surveillance program from the federal level. I found out that he backed and supported the Patriot Act. Both of those I believe are in direct violation of the Fourth Amendment. I found out he supported dark money coming into campaigns. As anybody knows who watches TV these days, there is more dark money coming into campaigns than there should be.”

Tester intended his claim with the “80 percent” remark to tie directly to his third core objection to Kavanaugh’s nomination. But the dark money spent to aid Kavanaugh makes a poor fit for that objection because few of those ads could count as campaign ads.

That means that Tester’s “80 percent” figure serves poorly to illustrate Kavanaugh’s dark money jurisprudence. If anything, it hints at a vague conspiracy of dark money groups supporting a judge that supports their way of operating. This is the type of case PolitiFact has in the past described as “a great example of a politician using more or less accurate statistics to make a meaningless claim.” But in this case PolitiFact has no idea whether the statistic was close to correct.

Review: Where PolitiFact Went Wrong

Let’s just stick with the major stuff:

PolitiFact erred by framing the dark money spending on the Kavanaugh nomination fight as all in Kavanaugh’s favor. It ran a headline suggesting its fact check would look at the role of dark money spending then focused only on spending by conservative groups.

PolitiFact claimed to fact check the claim that 80 percent of the money spent to promote Kavanaugh was dark money. PolitiFact admitted it had no estimate of the percentage and still rated the 80 percent claim “Half True.”

The fact checkers ignored the way Sen. Tester used “dark money” in his argument about Judge Kavanaugh. Tester’s argument was either incoherent or suggestive of a conspiracy theory. But PolitiFact’s fact check overlooks it.

Too often mainstream fact checkers just add their own veneer of spin to political claims. The Tester fact check serves as just one more example.

Saw this page leave a comment on another article (https://www.poynter.org/fact-checking/2018/ive-reported-on-misinformation-for-more-than-a-year-heres-what-ive-learned/), never heard of you before and decided to give you a dry run. No research to bias me one way or the other, just read a random article (ended up being this one) to see whether you are or aren’t the partisan site that you were presented as. Gotta say I’m not impressed.

For starters, if every expert you interview places the error bars such that 80 million fits within them every time, no matter how huge the error bars may be, you don’t come out and say the claim is false. You say that the 80 million dollars claim is missing very important context. And pulled directly from Politifact’s website in their explanation of what ratings mean: “Half True – The statement is partially accurate but leaves out important details or takes things out of context”. Maybe there’s a criticism to be made for Politifact’s rating system being a little too simple to capture the nuance of every claim they fact check, but as far as I can tell, that’s actually the best designation they have for this claim.

Now to address your criticism of demanding balance and pointing out other sources of dark money. Politifact is a fact checker, not a news outlet. It’s not their responsibility to make a social critique on the ubiquitous nature of dark money. And while I actually agree that it’s important context for an informed opinion of the dark money supporting Kavanaugh, it’s not Politifact’s job to make that case, only to address the claim they were asked to.

You want to make that social critique and comment on how these claims were originally made based on a cherry picking of data in order to unfairly characterize Kavanaugh, then by all means do it, but it’s not the job of a fact checker to address the meta narrative surrounding a claim.

You be you, and be a news outlet, but don’t expect to join the fact-checking club.

I take all criticism seriously. So let’s see what you’ve got, Marshall Shoots.

PolitiFact sometimes tries to provide contextual balance and sometimes doesn’t. Do you see a problem with that type of inconsistency? We do. A recent PolitiFact fact check gave a Texas Republican a “Half True” for attributing a sentiment to Dr. Martin Luther King, Jr. The paraphrase was apparently suitably accurate. Why the downgrade? PolitiFact pointed out (for balance?) that Dr. King had made his comment originally in the context of appealing for expanded civil rights. That might imply missing context–but who doesn’t know Dr. King was a civil rights activist? That context is implied upon attributing the sentiment to King.

PolitiFact fairly often will publish information items that assign no rating at all to a claim. Why wouldn’t that count as a better option? In fact, PolitiFact often employs a dubious “burden of proof” criterion for its fact checks. If the one making the claim fails to support the claim with hard evidence PolitiFact may issue a “False” rating (or worse). We’d say that’s an inappropriate principle for fact-checking (it’s the fallacy of argumentum ad ignorantiam). And the problem is even worse if applied inconsistently.

Hilarious. PolitiFact does that kind of thing often. You haven’t noticed?

Here’s one thing we do differently than PolitiFact: We label our articles. There’s fact check content, and we report the facts and identify our assessment of the fact under a separate heading. The article you’re commenting on is labeled as “commentary.” Did you notice?

There’s certainly no danger of me joining “the fact-checking” club until I convince the club that it is wrong to exclude persons with admitted political leanings. A journalist can be a member of the club in good standing if they keep secret their history of partisan voting. Probably not so much if the secret leaks out. Do I even want to be a member of a club that values keeping the political views of its members secret? No. I’ll work to get the IFCN to drop nonsense rules like that one. And the IFCN will be better for it.

If you haven’t already, read the site description.

I should add that PolitiFact was supposedly investigating a claim about dark money fueling the nomination fight. If both sides were fighting over the nomination (they apparently were) then spending by Democrat allies in opposition to Kavanaugh is directly relevant. PolitiFact treated it as irrelevant.