The Need for Polarized Teams in Fact-Checking

Could the use of polarized teams improve political fact-checking? We have noted in the past that mainstream fact checkers might easily avoid many of their mistakes by pursuing ideological diversity in their work groups.

We find it obvious that ideological diversity offers an easy way for media outlets to appeal to a broader audience. However, media ethics still largely holds to the requirement that journalists avoid revealing their ideology to readers.

We hold that media secrecy on the ideology of its writers and editors chiefly serves to fool readers into believing that journalists do not have political leanings that affect what they write. And we advocate a fact-checking model that values ideological diversity: Polarized fact-checking teams will produce more accurate and more neutral content while greatly increasing transparency in comparison to popular media fact-checking models.

What Is a Polarized Fact-Checking Team?

The concept of the polarized fact-checking team is simple. Instead of relying on a group of supposedly politically neutral fact-checkers (more likely a group of left-leaning fact-checkers), the polarized model deliberately uses a politically diverse team to choose subject matter and do the fact-checking. The team might consist simply of a left-leaning editor and a right-leaning writer. With a larger staff one might create a polarized writing team and review the work using a polarized editing team. It’s that simple.

Why Is Polarized Fact-Checking Better?

Polarized fact-checking improves on the traditional models of fact-checking by offering checks on selection and confirmation biases.

Groups holding to similar ideologies (all center-left, for example) will tend to have similar opinions about what to publish. Polarized teams will tend to draw from a wider pool of story ideas and do a better job of discarding bad story ideas such as fact-checking opinions. Both tendencies should help diminish story selection bias.

We have observed many cases where confirmation bias appeared to affect the way fact checkers interpret political claims. With a group such as PolitiFact, where at least four team members likely reviewed the story before publication, a dubious interpretation may leave the strong impression that the fact-checking organization carries a bias. The polarized team, in contrast, draws on a wider set of viewpoints. Such a group is less likely to pass off a collective confirmation bias as non-partisan fact-checking. After all, the confirmation biases of individual members will vary, with relatively little overlap, on a polarized team. Internal criticism within a polarized team offers an opportunity to address legitimate objections before publication.

Triangulation: a Recognized Technique

Fact checkers have long recognized the efficacy of one aspect of polarized teams: triangulation.

Triangulation originated as a method of improving distance estimates, used in construction, map-making and navigation. Viewing one destination from two different observation points yielded more accurate estimates of the distance involved.

Fact-checking researcher Michelle Amazeen noted a type of triangulation in the social sciences in her 2015 paper Revisiting the Epistemology of Fact-checking:

In the social sciences, triangulation refers to convergence of evidence from different methods or sources. By combining different methods of analysis, triangulation can compensate for the apparent weaknesses of any individual approach. If these differing methods all produce similar results, our confidence in the validity of findings increases (Singleton and Straits 2005).

Polarized teams may have a built-in advantage on triangulation, in that starting on a fact check with different worldview perspectives may result in differences in interpretation and method.

PolitiFact employs a similar technique when it tries to find a consensus position among a group of experts it has interviewed for a story (from Lucas Graves’ Deciding What’s True: Fact-Checking Journalism and the New Ecology of News, Page 191):

PolitiFact items often feature analysis from groups with opposing ideologies, a strategy sometimes described as “triangulating the truth.” Trainees were instructed to “Seek multiple sources. If you can’t get an independent source on something, go to a conservative and go to a liberal and see where they overlap.”

Beyond fact checkers’ use of triangulation, early research on polarized teams in the Wikipedia setting (The Wisdom of Polarized Crowds) suggests polarized teams produce better content:

A survey of editors validates that those who primarily edit liberal articles identify more strongly with the Democratic party and those who edit conservative ones with the Republican party. Our analysis then reveals that polarized teams—those consisting of a balanced set of politically diverse editors—create articles of higher quality than politically homogeneous teams. The effect appears most strongly in Wikipedia’s Political articles, but is also observed in Social Issues and even Science articles. Analysis of article “talk pages” reveals that politically polarized teams engage in longer, more constructive, competitive, and substantively focused but linguistically diverse debates than political moderates. More intense use of Wikipedia policies by politically diverse teams suggests institutional design principles to help unleash the power of politically polarized teams.

Why not apply the same principle to fact-checking?

Objections to Fact-Checking by Polarized Teams

We asked the Director of the International Fact-Checking Network, Alexios Mantzarlis, about fact-checking by polarized teams. We knew the IFCN favors fact-checking by politically neutral fact-checkers and expected that a model using polarized teams would test one of the IFCN’s established principles for fact-checking.

Mantzarlis expressed openness to the idea along with some skepticism. We appreciate the skeptical approach and will deal here with Mantzarlis’ objection. Note that Mantzarlis had not seen a proposal for the idea even as detailed as the one we presented above. We simply asked him whether neutrality was better judged in the content of a fact-check than via the personal politics of the fact-checker. In that context we mentioned the concept of polarized teams while providing a link to The Wisdom of Polarized Crowds.

Fact-checking Without Representation is Tyranny

Mantzarlis predicted the model using polarized teams would suffer from excess complexity, even more so in settings outside the United States where the political landscape is more varied and less bipolar. Would the polarized teams need representatives for the Green Party or Libertarians as well as other points of view?

With apologies for our lighthearted subheading, polarized teams are not expected to provide representation for any number of political subgroups. While more varied representation on a polarized team may help further improve accuracy, we see no compelling reason to pass up the advantages of the polarized team simply because some groups may lack representation. We limit representation on polarized teams just as fact checkers limit the number and variety of experts they interview: Increasing the pool probably costs more time and effort than it is worth.

The goal is a more reliable product, not the creation of a fact-checking team that mirrors national diversity.

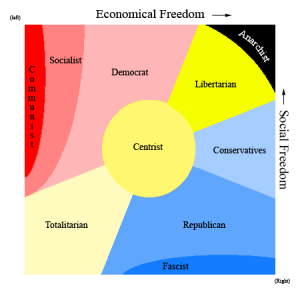

Greens and Libertarians gain if polarized teams composed of progressives and conservatives produce more accurate fact-checking than existing fact-checking models. The same principle applies where fact checkers deal with many political groups or parties. For any graphic representation of political positions, fact-checking should improve if the fact-checkers come from dissimilar locations on the chart instead of similar locations (all else being equal).

Accentuating the Positive

We do not intend the polarized team to serve as a type of politically representative body. We intend the polarized team to cut down on common types of fact-checking errors while improving journalistic transparency. Using the model of polarized teams frees media outlets from the deception of presenting journalists as politically neutral when we all know true political neutrality is next to impossible. And the new model would welcome to fact-checking a pool of capable researchers with distinct political views.

A politically polarized fact-checking team can afford greater transparency personally and organizationally than existing models. And it should result in a better finished product when applied to fact-checking. Better fact-checking along with transparent political leanings should help improve public trust in fact-checking organizations using polarized teams.

Acknowledging a Negative

Even if Mantzarlis proves incorrect in his expectation that a massively representative staff would financially burden polarized team fact-checking into oblivion, his core concern over financing carries weight with us for a different reason. Do Americans want neutral fact-checking enough to pay for it?

Partisan organizations like the left-leaning Media Matters For America and the right-leaning Media Research Center operate with budgets in the millions of dollars. Today’s U.S. gold standard for neutral fact-checking is the slightly left-leaning FactCheck.org. It operates with a budget of nearly $1 million.

Would FactCheck.org’s financial support rise or fall if it were objectively more neutral?

Is America so polarized it would reject the idea of improving fact-checking by using polarized teams?

We hope not. Visit here to support media fact-checking and accountability using polarized teams along with the other concepts we have championed here at Zebra Fact Check.


I absolutely agree with the value in this process. My only suggestion is, in the spirit of accentuating the positive, framing it differently as either “diverse” or “bipartisan” teams.

Political polarization is, I believe, one of the biggest dangers to our republic as evidenced by growing political violence such as the shooting of Steve Scalise and the Capitol Riots. Polarization tends to create roadblocks to communication and can paralyze decision-making groups with gridlock. It’s not generally something I would play up as a feature, even though I agree 100% that everything you suggest here is definitely valuable in the process of fact-checking.

Thanks for your comments, Felix.

A negative connotation to the term “polarized” counts among the least of my concerns on this topic. The current flavor of journalism ethics calls for pretending to political neutrality. That may crumble some with the recent push to redefine objectivity in journalism as something other than its traditional paradigm. “Polarized” is the term used in the research I cited in support, and therefore appropriate. I might also hope that deliberately creating polarized groups and having them work together productively without killing or harming one another may serve to model a peaceful approach to polarized co-existence.

And since you blocked my replies to your Quora answer lauding Media Bias/Fact Check, I’ll say my piece here, quoting you in spots.

It’s unlikely MB/FC uses random sampling. As I pointed out, an important step like that would warrant mention in the statement on methodology and it just isn’t there. As for my “submissions” I do not know what you’re talking about. I write what I write at Zebra Fact Check. What I do, AFAICT, has nothing to do with how MB/FC samples the site. I don’t send articles to MB/FC for consideration, for example. I don’t waste my time on that site because I consider it a sideshow. If MB/FC acquired a contaminated sampling of my writing, that’s all on them. So you think they blew the evaluation of ZFC but that doesn’t count against their reliability? You never really addressed that question (among others).

So it’s a sin to tweak the analogy to make it more closely match that which it was intended to illustrate and explain? That’s funny. If my modifications somehow failed to bring the analogy closer to the situation it was supposed to illuminate, that’s a complaint worth making.

No, I don’t see why. The potential for bias does not justify ignoring proffered complaints from any source. That’s an ad hominem fallacy. There’s a reason it’s a fallacy. Courts do have special rules regarding testimony from spouses, IIRC, but that’s not really the same thing and it doesn’t stop being a fallacy just because it happens in court.

You apparently justified your decision to block my replies based on my supposed failure to answer this question from you:

You were referring to the (misleading) framing you gave for my use of ZFC and PF to illustrate the inconsistency of process at MB/FC. But it should have been obvious that I explained that coherently: It’s a problem for MB/FC’s reliability if it gives ZFC the same grade it gives PF for reliability when the former is criticizing the accuracy of the latter. Maybe you’ll surprise me one day by acknowledging the point instead of trying to hocus-pocus it out of existence with vague references to selection bias.

In a response to another Quoran’s answer to the same question, MB/FC Dave himself offered a few wise words. At least I find them so:

It’s a category error to defend subjective ratings as “reliable” in the sense apparently intended in the original question. That’s why your reasons for calling MB/FC reliable could never add up. You ended up obfuscating by drawing an art/science distinction, but the key element is actually logic, with its companion reason. And subjectivity works no better on logic than it does on science. There’s ultimately no reason to regard MB/FC as reliable that is free of logical fallacy.